Which subreddit deletes the most comment? Is there censorship?

If you've been a long time reddit user you've probably realized that some subreddits are filled with deleted comments. Out of curiosity, I decided to do a quick analysis on just how many comments are deleted. To get started let's look at the result.

Result

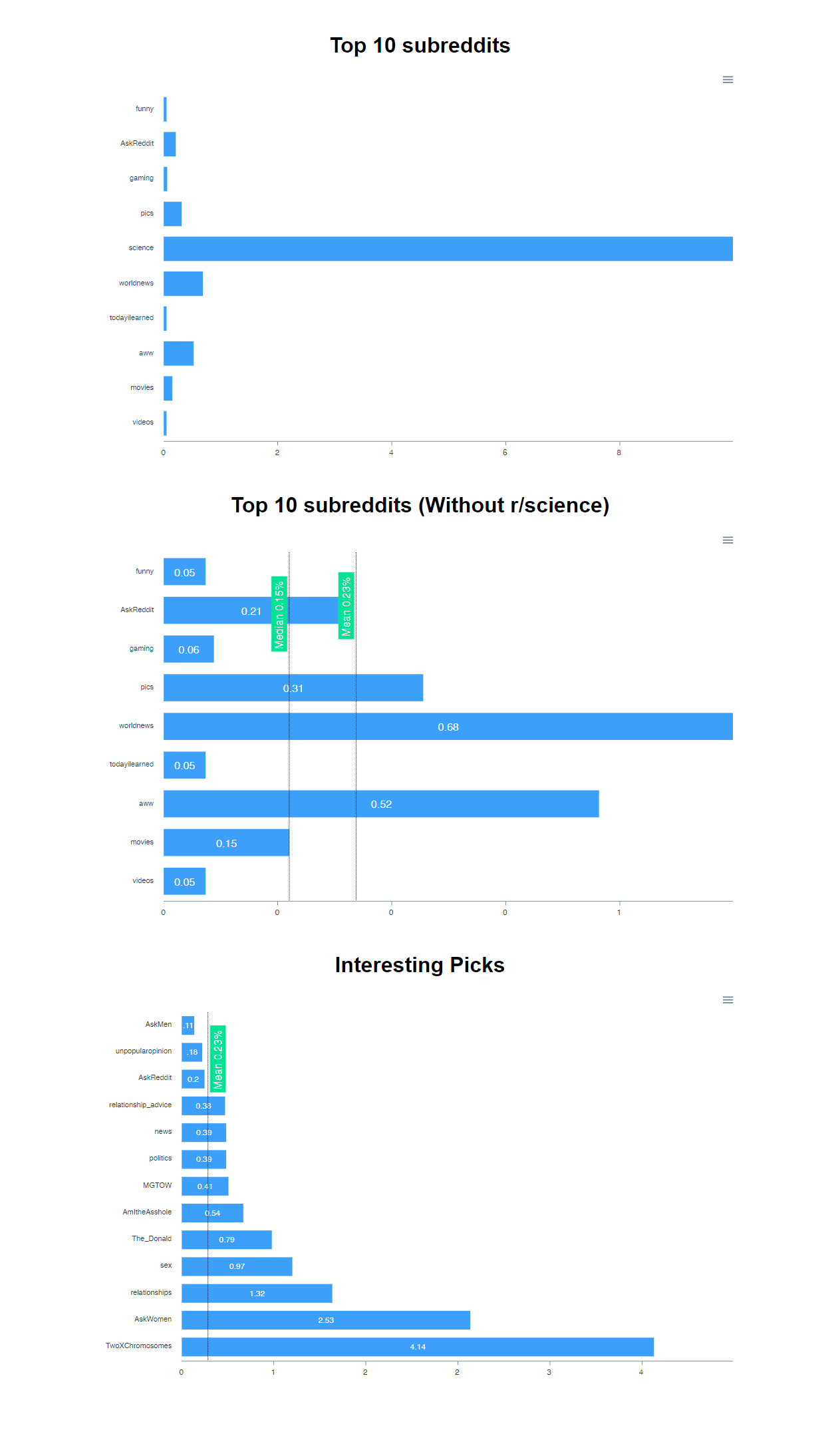

Looking at the top 10 subreddit gives us a good baseline for how many comments are usually deleted because of the larger sample size they provide. Interestingly r/science has an absurd amount of deleted comments. In the second chart we see that the average amount of deleted comments is 0.23% without r/science. That number will be useful for the next chart.

In the last chart I picked some interesting subreddits to study. The green line shows the average amount that was taken from the previous chart. Something I noticed is that style of moderating is a bigger influence on the deletion than the nature of the sub. For example we can see AskMen (0.11%) vs AskReddit (0.2%) vs AskWomen (2.53%), and relationship_advice (0.38%) vs relationships (1.32%).

Methodology

All data were pulled from the top 30 posts in each subreddit over 7 days in an ad-hoc manner. All were done at least 24 hours apart to prevent any overlap.

Source Code

I'm releasing the source code so everyone can perform their own analysis. It's written in JavaScript and needs to be run in the browser on reddit. The main functions to run are right at the bottom.

function getPosts(subreddit, callback) {

fetch('https://gateway.reddit.com/desktopapi/v1/subreddits/' + subreddit + '?&sort=hot')

.then(response => {

return response.json()

})

.then(data => {

let ids = Object.keys(data.posts)

callback(ids)

})

.catch(err => {

console.error(err)

})

}

function getComments(id, callback) {

fetch('https://gateway.reddit.com/desktopapi/v1/postcomments/' + id + '?sort=top&depth=100&limit=100000')

.then(response => {

return response.json()

})

.then(data => {

let stats = {

total: 0,

deleted: 0

}

for (let id in data.comments) {

let comment = data.comments[id]

stats.total++

if (comment.deletedBy == 'moderator') {

stats.deleted++

}

}

callback(stats)

})

.catch(err => {

console.error(err)

})

}

function analyze(subreddit, callback) {

getPosts(subreddit, ids => {

var total = 0,

deleted = 0,

counted = 0

for (let id of ids) {

getComments(id, stats => {

total += stats.total

deleted += stats.deleted

counted++

if (counted == ids.length) {

callback(subreddit, deleted, total);

}

})

}

})

}

function analyzeToJSON(subs) {

var dict = {}

function loop(i) {

if (i >= subs.length) {

console.log(JSON.stringify(dict));

return

}

analyze(subs[i], (sub, deleted, total) => {

dict[sub] = {

deleted: deleted,

total: total

}

loop(++i)

})

}

loop(0)

}

function analyzeToCSV(subs) {

let csv = ''

function loop(i) {

if (i >= subs.length) {

console.log(csv);

return

}

analyze(subs[i], (sub, deleted, total) => {

csv += `${sub},${deleted},${total},${Math.round(deleted / total * 10000, 2) / 100}%\n`;

loop(++i)

})

}

csv += 'sub,deleted,total,percent\n';

loop(0)

}

function compile(entries) {

let results = {}

for (let entry of entries) {

for (let sub in entry) {

if (!results[sub]) {

results[sub] = {

deleted: 0,

total: 0,

}

}

let r = results[sub]

let e = entry[sub]

r.deleted += e.deleted

r.total += e.total

}

}

for (let sub in results) {

let r = results[sub]

r.percent = r.deleted / r.total

}

return results

}

function getMean(entries) {

let total = 0,

count = 0

for (let sub in entries) {

total += entries[sub].percent

count++

}

return total / count

}

function getMedian(entries) {

let list = []

for (let sub in entries) {

list.push(entries[sub].percent)

}

list.sort((a, b) => a - b)

if (list.length % 2 === 0) {

let a = list.length / 2,

b = a - 1

return (list[a] + list[b]) / 2

} else {

return list[(list.length - 1) / 2]

}

}

function getStdDiv(entries) {

let list = []

let mean = getMean(entries)

for (let sub in entries) {

let d = entries[sub].percent - mean

list.push(Math.sqrt(d * d))

}

return list.reduce((accumulator, currentValue) => accumulator + currentValue) / list.length

}

function getCSV(data) {

let csv = 'sub,deleted,total,percent\n'

for (let sub in data) {

let r = data[sub]

csv += `${sub},${r.deleted},${r.total},${Math.round(r.percent * 10000, 2) / 100}%\n`;

}

return csv

}

function formatPercent(i) {

return Math.round(i * 10000, 2) / 100 + '%'

}

function report(entries) {

const percents = Object.values(entries).map(e => e.percent)

const max = formatPercent(Math.max.apply(null, percents))

const min = formatPercent(Math.min.apply(null, percents))

console.log(`Mean: ${formatPercent(getMean(entries))}, Median: ${formatPercent(getMedian(entries))}, Standard Deviation: ${formatPercent(getStdDiv(entries))}, Min: ${min}, Max: ${max}`);

console.log(getCSV(entries));

}

var presets = {

top10: [

'funny', 'AskReddit', 'gaming', 'pics', 'science', 'worldnews', 'todayilearned', 'aww', 'movies', 'videos'

],

custom: [

'AskReddit', 'AskMen', 'AskWomen', 'TwoXChromosomes', 'sex', 'relationships', 'relationship_advice',

'unpopularopinion', 'news', 'politics', 'The_Donald', 'MGTOW', 'AmItheAsshole',

],

}

var results = {

top10: [{

"funny": {

"deleted": 3,

"total": 5053

},

"AskReddit": {

"deleted": 34,

"total": 8687

},

"gaming": {

"deleted": 2,

"total": 3954

},

"pics": {

"deleted": 4,

"total": 3691

},

"science": {

"deleted": 195,

"total": 2786

},

"worldnews": {

"deleted": 30,

"total": 5580

},

"todayilearned": {

"deleted": 1,

"total": 4889

},

"aww": {

"deleted": 5,

"total": 3133

},

"movies": {

"deleted": 10,

"total": 5129

},

"videos": {

"deleted": 1,

"total": 4038

}

}, // add more results here

],

custom: [{

"AskReddit": {

"deleted": 34,

"total": 8723

},

"AskMen": {

"deleted": 0,

"total": 1626

},

"AskWomen": {

"deleted": 37,

"total": 2351

},

"TwoXChromosomes": {

"deleted": 67,

"total": 1872

},

"sex": {

"deleted": 9,

"total": 549

},

"relationships": {

"deleted": 12,

"total": 1276

},

"relationship_advice": {

"deleted": 12,

"total": 2684

},

"unpopularopinion": {

"deleted": 5,

"total": 2233

},

"news": {

"deleted": 20,

"total": 3252

},

"politics": {

"deleted": 30,

"total": 7723

},

"The_Donald": {

"deleted": 21,

"total": 3069

},

"MGTOW": {

"deleted": 1,

"total": 850

},

"AmItheAsshole": {

"deleted": 30,

"total": 8131

}

}, // add more results here

],

}

// analyzeToJSON(presets.top10)

// analyzeToCSV(presets.custom)

// report(compile(results.top10))

// report(compile(results.custom))